The "Type" test of transformers is based on the standards established by the International Electrotechnical Commission (IEC) under IEC 60076. IEC 60076 is the main international standard series for power transformers, covering their design, manufacturing, and testing requirements. A type test is carried out on a transformer that is representative of a particular design, usually at the factory before delivery, to verify that the design and manufacture comply with the standard and the technical specification and that the transformer performs safely.

"Type" tests generally include the following aspects:

The rated load test verifies the transformer's operating capability under rated conditions, including rated current and power factor. The process involves running the transformer at rated load for a period of time (usually 1-2 hours). During the test, parameters such as temperature, current, voltage, and losses are monitored in real time.

For example, assume a transformer with a rated capacity of 1000 kVA, a rated voltage of 10 kV/0.4 kV, and a rated frequency of 50 Hz, designed for a rated load of 1000 kVA.
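The text does not state whether the example unit is single- or three-phase. Assuming a three-phase transformer, the rated current that must flow on each side during the test follows from I = S / (√3 · U). A minimal Python sketch (the function name is illustrative, not from any library):

```python
import math


def rated_current_a(rated_kva: float, line_voltage_kv: float) -> float:
    """Rated line current of a three-phase winding: I = S / (sqrt(3) * U).

    kVA divided by kV gives amperes directly.
    """
    return rated_kva / (math.sqrt(3) * line_voltage_kv)


# Ratings from the example: 1000 kVA, 10 kV / 0.4 kV (three-phase assumed).
hv_current = rated_current_a(1000, 10.0)
lv_current = rated_current_a(1000, 0.4)
print(f"HV rated current: {hv_current:.1f} A")  # HV rated current: 57.7 A
print(f"LV rated current: {lv_current:.1f} A")  # LV rated current: 1443.4 A
```

For a single-phase unit, the √3 factor would be dropped.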

Test procedure example:

Load selection: Select a load of 1000 kVA using a simulated load bank or load equipment. The transformer is connected to the load, ensuring the input voltage is 10 kV and the output voltage is 0.4 kV. The transformer is run for 1-2 hours while recording temperatures at various parts of the transformer (such as oil temperature, winding temperature, etc.).

Losses and efficiency: Measure the transformer's copper losses (load losses caused by current flowing in the windings) and core losses (no-load losses caused by the alternating magnetic flux in the core). Suppose the efficiency calculated from these losses is 98%; this would meet the specified requirements.
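Efficiency follows from the measured losses: core loss is roughly constant, while copper loss scales with the square of the load current. The sketch below assumes that simple model; the function name is illustrative and the loss figures in the example are hypothetical, not taken from the text.

```python
def efficiency(rated_kva: float, no_load_loss_kw: float, load_loss_kw: float,
               load_factor: float = 1.0, power_factor: float = 1.0) -> float:
    """Efficiency = output / (output + losses).

    no_load_loss_kw: core loss, treated as independent of load.
    load_loss_kw: copper loss at rated current; scaled by load_factor**2
    because it is proportional to I^2 * R.
    """
    output_kw = load_factor * rated_kva * power_factor
    losses_kw = no_load_loss_kw + load_factor ** 2 * load_loss_kw
    return output_kw / (output_kw + losses_kw)


# Hypothetical losses for the 1000 kVA example.
full_load = efficiency(1000, no_load_loss_kw=1.1, load_loss_kw=10.5)
half_load = efficiency(1000, no_load_loss_kw=1.1, load_loss_kw=10.5, load_factor=0.5)
print(f"Full load: {full_load:.2%}, half load: {half_load:.2%}")
```

Because copper loss falls with the square of the load, efficiency at part load can be higher than at rated load.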

The temperature rise test involves running the transformer at rated load until its temperatures stabilize and measuring the temperature rise of various parts of the transformer, to ensure that it will not overheat during long-term operation. Temperature rise is one of the most critical test parameters: an excessive rise can indicate a design flaw or overloading, either of which affects the long-term reliability of the transformer.

For example, after the transformer has run at rated load until its temperatures are stable, the top-oil temperature rise is 40 K and the average winding temperature rise is 50 K. Both are below the limits (for a typical oil-immersed transformer, IEC 60076-2 limits the top-oil rise to 60 K and the average winding rise to 65 K), so the transformer passes the temperature rise test.
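A temperature rise is the difference between a measured temperature and the ambient temperature, so the pass/fail decision reduces to two subtractions and two comparisons. A minimal sketch: the function name, the ambient temperature, and the measured temperatures are hypothetical (chosen to reproduce the 40 K and 50 K rises in the example), and the limits are passed in because they depend on the cooling method and the contract.

```python
def check_temperature_rise(top_oil_c: float, avg_winding_c: float, ambient_c: float,
                           oil_limit_k: float, winding_limit_k: float):
    """Return (oil rise, winding rise, passed).

    A rise is the measured temperature minus the ambient (cooling-air)
    temperature; a temperature difference is expressed in kelvin.
    """
    oil_rise_k = top_oil_c - ambient_c
    winding_rise_k = avg_winding_c - ambient_c
    passed = oil_rise_k <= oil_limit_k and winding_rise_k <= winding_limit_k
    return oil_rise_k, winding_rise_k, passed


# Hypothetical readings; illustrative limits, take real ones from the specification.
result = check_temperature_rise(top_oil_c=65.0, avg_winding_c=75.0, ambient_c=25.0,
                                oil_limit_k=60.0, winding_limit_k=65.0)
print(result)  # (40.0, 50.0, True)
```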

The insulation resistance test is used to measure the resistance of the transformer’s insulation system (such as between windings and ground, and between windings themselves) to ensure that the insulation is capable of effectively isolating electrical currents and preventing leakage or electrical faults.

During the test, a DC voltage is applied to various electrical parts of the transformer (depending on the transformer's rated voltage, typically 500 V, 1000 V, or 2500 V), and the current passing through the insulation material is measured. The insulation resistance is then calculated. The higher the insulation resistance, the better the insulation performance, and the lower the leakage current.

Insulation resistance is typically expressed in megaohms (MΩ). A value that is too low (usually below 1 MΩ, or 0.5 MΩ in some practices) indicates poor insulation performance. Minimum acceptable values are set by the applicable specification; for example, the insulation resistance must be greater than 1 MΩ for transformers with a rated voltage of 10 kV or lower, and greater than 5 MΩ for transformers rated above 10 kV.
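Because the instrument applies a known DC voltage and measures a small current, the resistance follows from Ohm's law; expressing the current in microamperes gives the result directly in megaohms. The sketch below computes the value and compares it with the example thresholds quoted above (1 MΩ up to 10 kV, 5 MΩ above). The function names and the 0.5 µA reading are hypothetical.

```python
def insulation_resistance_mohm(test_voltage_v: float, current_ua: float) -> float:
    """Ohm's law, R = V / I. Volts divided by microamperes yields megaohms."""
    return test_voltage_v / current_ua


def minimum_resistance_mohm(rated_voltage_kv: float) -> float:
    """Example thresholds quoted in the text: 1 MOhm up to 10 kV, 5 MOhm above."""
    return 1.0 if rated_voltage_kv <= 10.0 else 5.0


# Hypothetical reading: 2500 V applied to a 10 kV winding, 0.5 uA measured.
r_mohm = insulation_resistance_mohm(2500, 0.5)
passed = r_mohm > minimum_resistance_mohm(10.0)
print(f"R = {r_mohm:.0f} MOhm, passed: {passed}")  # R = 5000 MOhm, passed: True
```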

The partial discharge test is used to detect whether partial discharge phenomena occur in a transformer under high voltage, in order to prevent potential insulation damage during long-term operation. Partial discharges typically occur at parts of the transformer such as the high-voltage windings, terminals, joints, and insulation materials.

The partial discharge test is usually conducted in a standardized high-voltage laboratory where the environment simulates the actual operating conditions of the transformer, including temperature and humidity. The partial discharge detector is connected, through its probe, to the high-voltage windings and related electrical contacts of the transformer so that any discharge occurring in the equipment can be picked up. The transformer is then energized at or above its rated voltage (e.g., 1.5 or 2 times the rated voltage) to observe how the insulation system behaves under high electric field stress.

The detector monitors discharge signals from parts such as the windings, joints, and insulation materials. Test personnel observe the results displayed on the detector and record the waveform, frequency, and amplitude of the partial discharge signals. A large signal amplitude or a long discharge duration indicates a significant insulation defect that may require repair or replacement. Conversely, a weak and stable signal indicates that the transformer's insulation system is performing well. Under IEC 60076 and other industry standards, the partial discharge level at the test voltage must be below the specified limit, and there must be no continuous discharge. The limit depends on the transformer type and the test voltage: a level below 10 pC (picocoulombs) is typical for dry-type transformers, while IEC 60076-3 specifies higher limits for liquid-immersed transformers.
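The pass/fail decision can be sketched as a comparison of the recorded apparent-charge readings against the applicable limit. This is a simplified illustration: the function name and readings are hypothetical, the 10 pC limit is the typical value mentioned above, and judging "continuous discharge" in practice requires inspecting the recorded waveforms, which this sketch does not attempt.

```python
def evaluate_partial_discharge(readings_pc: list[float], limit_pc: float):
    """Return (peak reading, number of readings at or above the limit, passed).

    readings_pc: apparent charge in picocoulombs, sampled while the test
    voltage is held.
    """
    peak = max(readings_pc)
    exceedances = sum(1 for q in readings_pc if q >= limit_pc)
    return peak, exceedances, exceedances == 0


# Hypothetical readings recorded during the hold at test voltage.
readings = [3.2, 4.1, 3.8, 4.0, 3.9]
peak, exceedances, passed = evaluate_partial_discharge(readings, limit_pc=10.0)
print(f"Peak {peak} pC, {exceedances} readings at or above limit, passed: {passed}")
```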

The short-circuit test is performed by short-circuiting one winding of the transformer (typically the low-voltage side) to simulate short-circuit conditions and supplying the other winding with a voltage that is raised until rated current flows; this voltage is only a small fraction of the rated voltage. The test measures the short-circuit impedance, load losses, and temperature rise characteristics of the transformer, providing the data needed to confirm that the transformer will not experience excessive electrical stress or temperature rise during operation.
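Short-circuit measurements are normally referred to rated current: the impedance voltage scales linearly with current and the load loss with its square. A minimal sketch, assuming a three-phase unit with line voltages measured on the supplied winding. The function name and measured figures are hypothetical, and the correction of load loss to a reference winding temperature is omitted.

```python
def short_circuit_results(rated_voltage_v: float, rated_current_a: float,
                          measured_voltage_v: float, measured_current_a: float,
                          measured_loss_w: float):
    """Refer short-circuit measurements to rated current.

    Returns (impedance voltage in % of rated voltage, load loss in W).
    """
    scale = rated_current_a / measured_current_a
    impedance_voltage_v = measured_voltage_v * scale  # linear in current
    load_loss_w = measured_loss_w * scale ** 2        # proportional to current squared
    uk_percent = 100.0 * impedance_voltage_v / rated_voltage_v
    return uk_percent, load_loss_w


# Hypothetical: 10 kV winding with 57.7 A rated current, tested at half current.
uk, pk = short_circuit_results(rated_voltage_v=10_000, rated_current_a=57.7,
                               measured_voltage_v=210.0, measured_current_a=28.85,
                               measured_loss_w=2600.0)
print(f"uk = {uk:.2f} %, load loss = {pk / 1000:.1f} kW")
```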

For example, consider an oil-immersed transformer with a rated voltage of 110/10 kV. The process for conducting the short-circuit test is as follows:

The no-load test is conducted with one winding open (i.e., no load connected) and rated voltage at rated frequency applied to the other winding; in practice the low-voltage winding is usually the one energized, because the required supply voltage is much lower. The input current and power are measured to determine the no-load current and the no-load loss, which consists almost entirely of core loss and reflects the magnetic characteristics of the core. The test thus verifies the transformer's performance under no-load conditions.
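For a three-phase unit, the no-load current is commonly reported as the mean of the three phase currents, expressed as a percentage of the rated current of the energized winding, while the no-load loss is read directly from the wattmeter. A minimal sketch, assuming a 1000 kVA unit energized from its 0.4 kV side; the function name, phase currents, and loss reading are hypothetical.

```python
import math


def no_load_current_percent(phase_currents_a: list[float], rated_kva: float,
                            line_voltage_kv: float) -> float:
    """Mean no-load phase current as a percentage of rated current."""
    rated_current_a = rated_kva / (math.sqrt(3) * line_voltage_kv)
    mean_current_a = sum(phase_currents_a) / len(phase_currents_a)
    return 100.0 * mean_current_a / rated_current_a


# Hypothetical readings on the energized 0.4 kV side of a 1000 kVA unit.
phase_currents = [14.2, 10.1, 14.5]
no_load_loss_w = 1100.0  # read directly from the wattmeter
i0 = no_load_current_percent(phase_currents, rated_kva=1000, line_voltage_kv=0.4)
print(f"I0 = {i0:.2f} % of rated current, P0 = {no_load_loss_w / 1000:.1f} kW")
```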

For example, consider an oil-immersed transformer with a rated voltage of 110/10 kV. The process for conducting the no-load test is as follows:

The load test involves connecting the low-voltage side of the transformer to a specified load and applying rated voltage to the high-voltage side of the transformer to operate it under load conditions. The purpose of the test is to measure and evaluate parameters such as temperature rise, losses, efficiency, and load current under load conditions, ensuring the safety, performance, and energy efficiency of the transformer when operating under load.

For example, consider an oil-immersed transformer with a rated capacity of 500 kVA. The load test procedure is as follows:

The high potential test, also known as the hi-pot test, involves applying a high voltage (usually 1.5 to 2 times the rated voltage of the transformer) to assess the dielectric strength of the transformer's insulation system. It confirms that the insulation can withstand normal working voltage with a safety margin and gives added assurance against abnormal overvoltages such as lightning or switching surges, although withstand against those is normally verified directly by separate impulse tests.

For example, consider an oil-immersed transformer with a rated capacity of 500 kVA. The procedure for conducting the high potential test is as follows:

Test Voltage Selection: The test voltage is set to 1.5 times the rated voltage, i.e., 750 V (if the transformer’s rated voltage is 500 V).

Voltage Application: The voltage is raised gradually from zero to 750 V and held for one minute. Leakage current is recorded throughout the test; it must remain small and stable, with no breakdown or flashover on either the high-voltage or the low-voltage side of the transformer.

Insulation Resistance Measurement: The insulation resistance is measured to ensure it exceeds the specified value (e.g., greater than 10 MΩ), ensuring the transformer can operate safely under high voltage conditions.

Visual Inspection: During the test, the transformer is observed for signs of overheating, discharge, or abnormal sounds. At the end of the hold period, the voltage is reduced gradually to zero and the test data is recorded.

If no breakdown or abnormal phenomena occur during the test, the transformer passes the high potential test.
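The voltage selection, step-wise ramp, and hold evaluation above can be sketched as follows. The 1.5 multiplier and the 500 V rating come from the example; the function names, the number of ramp steps, the leakage readings, and the trip current are hypothetical.

```python
def hipot_plan(rated_voltage_v: float, multiplier: float = 1.5, steps: int = 5):
    """Return the test voltage and the intermediate ramp set-points (V)."""
    test_voltage_v = multiplier * rated_voltage_v
    ramp_v = [round(test_voltage_v * k / steps, 1) for k in range(1, steps + 1)]
    return test_voltage_v, ramp_v


def hold_passed(leakage_ua: list[float], trip_ua: float, flashover: bool) -> bool:
    """Pass if no flashover occurred and leakage stayed below the trip level."""
    return not flashover and max(leakage_ua) < trip_ua


test_v, ramp = hipot_plan(500)
print(test_v, ramp)  # 750.0 [150.0, 300.0, 450.0, 600.0, 750.0]
# Hypothetical leakage readings (uA) sampled during the one-minute hold.
print(hold_passed([12.0, 12.3, 12.1], trip_ua=100.0, flashover=False))  # True
```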


Kingrun Transformer Instrument Co.,Ltd.

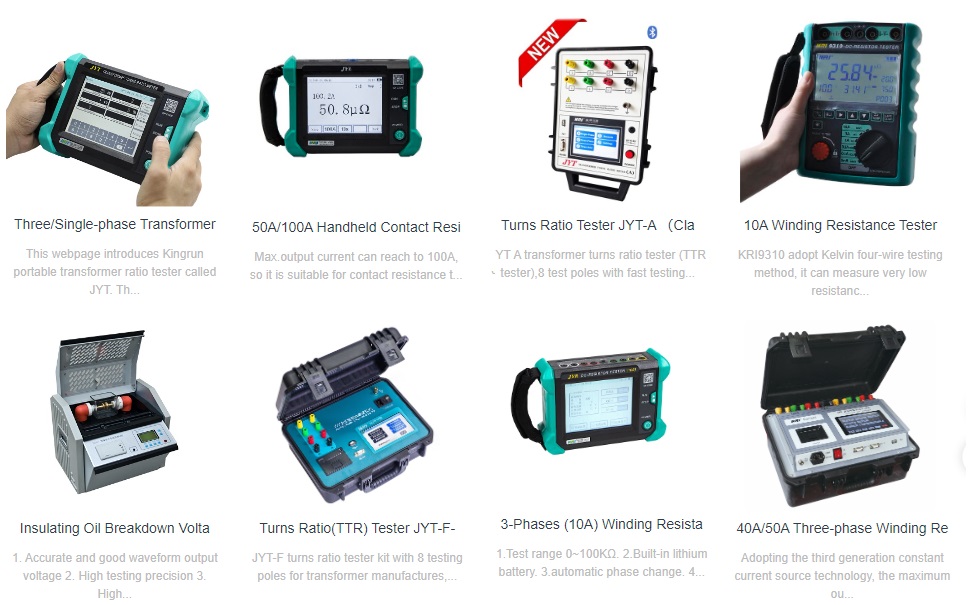

More Transformer Testers from Kingrun